This thread will serve as the main thread for technical queries, bug fixes, and technical issues related to the Mainnet Validator.

You may view the f(x)core repository here.

I may have made a copy-paste error: while reconfiguring persistent_peers I added the wrong entries, and my validator may have polluted the network with testnet validators by mistake.

Errors that appear in the logs:

7:43PM ERR dialing failed (attempts: 3): incompatible: peer is on a different network. Got dhobyghaut, expected fxcore addr={"id":"0ea7e81071d4004a1fbbe304477d8ca3183a5282","ip":"54.86.x.x","port":26656} module=pex

7:43PM ERR dialing failed (attempts: 2): incompatible: peer is on a different network. Got dhobyghaut, expected fxcore addr={"id":"cc267dac09a38b67b3bda0033f62678cb54bf843","ip":"18.210.x.x","port":26656} module=pex

7:43PM ERR dialing failed (attempts: 2): incompatible: peer is on a different network. Got dhobyghaut, expected fxcore addr={"id":"d22e741b4e8e2586dbe38fd348d3de8dfbb889a0","ip":"52.55.x.x","port":26656} module=pex

7:43PM ERR dialing failed (attempts: 3): incompatible: peer is on a different network. Got dhobyghaut, expected fxcore addr={"id":"a817685c010402703820be2b5a90d9e07bc5c2d3","ip":"54.174.x.x","port":26656} module=pex

7:43PM ERR dialing failed (attempts: 3): incompatible: peer is on a different network. Got dhobyghaut, expected fxcore addr={"id":"c1a985c7e4c0b5ce6d343d87e070a63b24a76594","ip":"52.87.x.x","port":26656} module=pex

I’ve tried removing addrbook.json on my validators after they were stopped, but it didn’t work: the network keeps adding them back into the address book.

Maybe we should ban testnet peers, but I’m not sure how. Or just ignore the errors/issue?
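To see exactly which peers are being rejected, the error lines above can be collected into a list of offending peers. The following is a minimal sketch: the regular expression is inferred only from the sample log lines in this post (not from any log specification), and the function name `wrong_network_peers` is hypothetical. It only reports the peers; it does not remove or ban them.

```python
import json
import re

# Pattern inferred from the sample log lines in this thread only.
WRONG_NETWORK = re.compile(
    r"peer is on a different network\. "
    r"Got (?P<got>\S+), expected (?P<expected>\S+) "
    r"addr=(?P<addr>\{.*?\})"
)


def wrong_network_peers(log_lines):
    """Return {peer_id: (ip, port, network)} for peers on another network."""
    peers = {}
    for line in log_lines:
        match = WRONG_NETWORK.search(line)
        if match is None:
            continue  # not a "different network" dial error
        addr = json.loads(match.group("addr"))
        peers[addr["id"]] = (addr["ip"], addr["port"], match.group("got"))
    return peers


sample = [
    '7:43PM ERR dialing failed (attempts: 3): incompatible: peer is on a '
    'different network. Got dhobyghaut, expected fxcore '
    'addr={"id":"0ea7e81071d4004a1fbbe304477d8ca3183a5282",'
    '"ip":"54.86.x.x","port":26656} module=pex',
    '7:43PM INF some unrelated line module=p2p',  # made-up filler line
]

for peer_id, (ip, port, network) in wrong_network_peers(sample).items():
    print(f"{peer_id}@{ip}:{port} is on {network}")
```

Keying the result by peer ID means repeated dial attempts to the same peer collapse into one entry.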

Did you edit your node peers in the config file after removing the address book? Or are you perhaps still configured to use the testnet seeds?

And to answer your question about seed_nodes here, if you are already running a validator node, you do not need to enable your seed_node.

With regard to seed nodes, their main function is to provide more node addresses to the network. A seed node records all the nodes connected to it, and when you connect to it, it tells you all the node information it has recorded, so you can quickly connect to more nodes.

The seed node will actively disconnect from you after telling you all the node information, so it is not recommended that a validator node enable seed mode.
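As a rough illustration of the advice above, a small check can flag a validator that has seed mode switched on. This sketch assumes the node uses the standard Tendermint `config.toml` layout, where `seed_mode` sits in the `[p2p]` table; the function name `seed_mode_warnings` and the sample values are hypothetical.

```python
def seed_mode_warnings(p2p, is_validator):
    """Return warnings for an already-parsed [p2p] table of config.toml.

    ASSUMPTION: seed mode is controlled by a boolean "seed_mode" key.
    """
    warnings = []
    if is_validator and p2p.get("seed_mode", False):
        warnings.append(
            "seed_mode is enabled on a validator node; consider disabling it"
        )
    return warnings


# Hypothetical values; in practice the dict would come from parsing
# the [p2p] section of the node's config.toml.
validator_p2p = {"seed_mode": True, "pex": True}
print(seed_mode_warnings(validator_p2p, is_validator=True))
```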

Is it normal for a validator to be missing blocks?

tendermint_consensus_validator_missed_blocks seems to be going up slowly. It’s not too many but I am just wondering if this is of concern or normal?

Yes, it’s normal to miss some blocks. However, make sure you don’t miss too many blocks.

Please refer to the Validator FAQ: under “slashing conditions” there’s an explanation of downtime. @l4zyboi

When missing blocks, there are generally 2 factors you’ll need to consider: the number of blocks missed and how consistently you are missing them. Infrequent misses of one or two blocks wouldn’t be a problem, but persistent loss of blocks or a huge number of missed blocks may signal issues with your network or something else. Do also check your own network’s situation and the network delay of other nodes.
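The two factors mentioned above (how many blocks are missed, and whether they are missed back to back) can be separated with a few lines of code. This is a sketch under the assumption that you already have a per-block signed/missed history from your own monitoring; the function name `miss_stats` and the sample histories are made up for illustration.

```python
def miss_stats(signed):
    """Summarise a sequence of booleans (True = block signed).

    Returns (total_missed, longest_consecutive_missed).
    """
    total = 0
    longest = 0
    current = 0
    for ok in signed:
        if ok:
            current = 0  # a signed block ends the current streak
        else:
            total += 1
            current += 1
            longest = max(longest, current)
    return total, longest


# Two made-up histories with the same number of misses but different patterns.
scattered = [True, False, True, True, False, True, True, False, True, True]
burst = [True, True, True, False, False, False, True, True, True, True]

print(miss_stats(scattered))  # (3, 1)
print(miss_stats(burst))      # (3, 3)
```

Both histories miss three blocks, but the second one misses them consecutively, which is the pattern worth investigating first.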

Yes. What I did was: I corrected the config, shut down the nodes, removed the address book, and started the nodes again (the config was correct). These unexpected addresses were still added (possibly from FX’s upstream seed nodes).

Thanks for the explanation Richard.

So as long as we never miss 27.7 hrs of blocks back to back, we will be fine?

@Richard Got a non-standard configuration question.

Let’s say I’ve got servers A and B (B as a fallback, i.e. a backup). Normally I run a validator on server A, and in the meantime I run a non-validator node on B (with a different node_key). However, during longer maintenance or a crash, I want to temporarily move the validator from A to server B to minimize downtime (so I apply the validator key). I’m aware I need to configure the same priv_validator_key (while A is down); however, can node_key still be different on both servers while priv_validator_key is the same? Or do the two keys need to be the same at any given time when running the validator?

a full node cannot share the same priv_validator_key.json file as your validator node (while both are running at the same time)

- My question is more about node_key, can it be the same or not on 2 different servers?

- I know priv_validator_key cannot be the same, so what are the consequences of using the same priv_validator_key by accident? (This is another question, not related to the previous one.) E.g. my crashed server comes back online while a fallback/backup validator is running at the same time. Is the network going to jail it, or will it just be confused?

My question is more about node_key, can it be the same or not on 2 different servers?

The node_key is used for p2p communication. Two nodes cannot use the same key; this will cause confusion in the system.

with regards to your question about creating a snapshot from another node

you should create another node, synchronize the data for that node, stop it and then generate a snapshot from that data

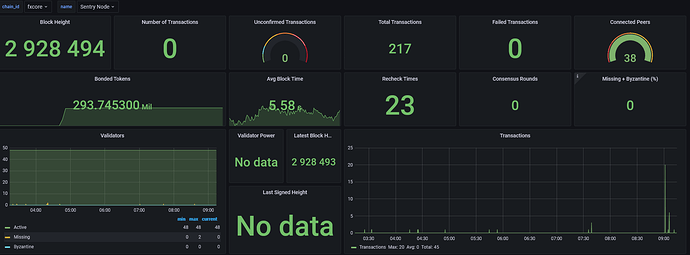

@l4zyboi @cop4200 @ClaudioxBarros I noticed you guys were facing a data source issue with the node monitoring tool, i.e. Grafana. For anyone here who is going to pull the latest code for Grafana: you might have some configuration/password issues, in which case your dashboard will show a 401 error. Do reconfigure your passwords or reset them in Grafana.

Heyy Richard,

@ClaudioxBarros already helped me out over telegram.

I think the update was unnecessary; the old v8.3.0 also had the 401 issue, which is data source access denied due to wrong credentials…

@l4zyboi @FrenchXCore @FxWorldValidator @ClaudioxBarros @Fox_Coin

to answer some of your questions, not sure if I missed out on anyone here

- Why are blocks missed?

Answer: Blocks are missed due to network problems (local network issues, too few peer nodes, or peer network problems).

- How should uptime be calculated?

Answer: It should be calculated on a rolling 20,000-block basis. For example, if I have missed 50 blocks in a 20,000-block window, then uptime is (20,000-50)/20,000 = 99.75%.

One thing to note is that we have to pay attention to whether the missed blocks are consecutive and whether they occur within a short period of time (which would be more serious).

- How do I query a particular block’s signature?

Answer:

fxcored query block 644696 --node https://testnet-fx-json.functionx.io:26657 | jq '.block.last_commit'

if a signature is empty, then a validator has not signed that block
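The uptime formula and the empty-signature check from the answers above can be sketched together. The uptime function follows the (20,000-50)/20,000 example directly. The signature check is more speculative: it assumes the `last_commit` JSON contains a `signatures` list whose entries have a `signature` field that is null or empty when a validator did not sign. The function names and the sample data are hypothetical, so compare against your own jq output before relying on it.

```python
import json


def uptime_percent(missed, window=20_000):
    """Uptime over a rolling window, as (window - missed) / window, in %."""
    if not 0 <= missed <= window:
        raise ValueError("missed must be between 0 and window")
    return (window - missed) * 100 / window


def unsigned_entries(last_commit):
    """Return indexes of entries in last_commit whose signature is empty.

    ASSUMPTION: last_commit has a "signatures" list whose items carry a
    "signature" field that is null or "" when the validator did not sign.
    """
    return [
        index
        for index, entry in enumerate(last_commit.get("signatures", []))
        if not entry.get("signature")
    ]


print(uptime_percent(50))  # 99.75

# Made-up data in the assumed shape, standing in for the jq output.
sample_commit = json.loads("""
{"signatures": [
  {"validator_address": "AAAA", "signature": "c2lnMQ=="},
  {"validator_address": "", "signature": null},
  {"validator_address": "CCCC", "signature": "c2lnMw=="}
]}
""")
print(unsigned_entries(sample_commit))  # [1]
```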

I have one doubt

My local sentry node is set to pex=true so as not to have so many connections. The weird thing is that it should only connect to a maximum of 8 peers according to the settings, but sometimes it has 12 connections. That shouldn’t happen, right?

Did you check all the other fields, such as persistent peers, to make sure they are configured correctly?

you may take a look at the explanation of each field and further below, a table of values (summary).
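One possible reason a node shows more connections than a single limit suggests is that several settings add up. The sketch below estimates a rough upper bound from an already-parsed `[p2p]` table. It assumes the standard Tendermint key names (`max_num_inbound_peers`, `max_num_outbound_peers`, `persistent_peers`) and assumes that persistent peers are not counted against the outbound limit. The function names and all values are hypothetical, and other settings that can bypass limits are not modeled.

```python
def split_peers(value):
    """Split a comma-separated peer list, ignoring empty entries."""
    return [item.strip() for item in value.split(",") if item.strip()]


def max_expected_peers(p2p):
    """Rough upper bound on connections implied by a parsed [p2p] table.

    ASSUMPTION: the outbound limit excludes persistent peers, so they are
    added on top of the inbound and outbound limits.
    """
    inbound = p2p.get("max_num_inbound_peers", 0)
    outbound = p2p.get("max_num_outbound_peers", 0)
    persistent = len(split_peers(p2p.get("persistent_peers", "")))
    return inbound + outbound + persistent


# Hypothetical settings, not taken from the thread.
p2p = {
    "max_num_inbound_peers": 4,
    "max_num_outbound_peers": 4,
    "persistent_peers": "id1@10.0.0.1:26656,id2@10.0.0.2:26656,"
                        "id3@10.0.0.3:26656,id4@10.0.0.4:26656",
}
print(max_expected_peers(p2p))  # 12
```

With these made-up numbers, a node whose inbound and outbound limits sum to 8 could still hold 12 connections once its 4 persistent peers are included.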

It is well configured. But it is OK now; by some miracle it is OK now.


haha ok. after you have changed your config.toml file, do make sure you restart that particular node to reflect any of the changes

@Richard ,

I was thinking.

What would be the risk of sharing sentry nodes between trusted validators?

I can’t see any actually…

The only thing is sharing the IP, I think, as someone already said.

You are using sentry nodes… they are open; what changes is the config (PEX).

The risk is that everyone would know your validator’s IP address…

This can leave you open to attacks, SSH brute-force attempts, etc…

I don’t feel comfortable sharing my own validator’s address for those reasons.

But if someone would like to share their node’s address and wants me to add them to my sentries, I have no problem with that.